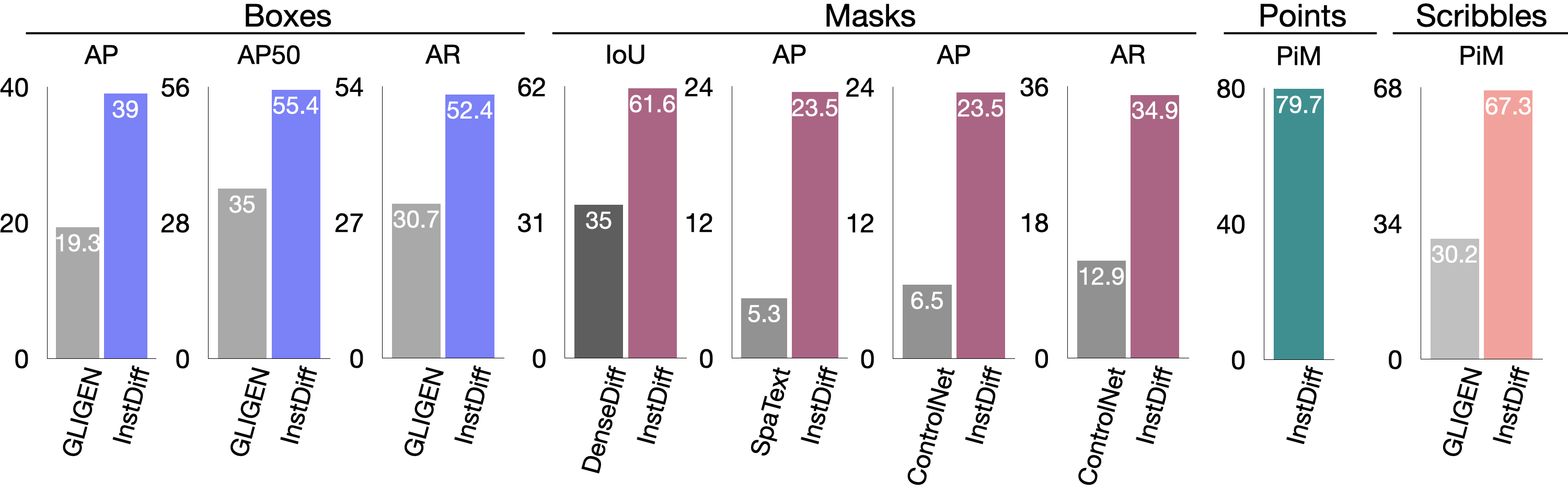

Text-to-image diffusion models produce high-quality images but do not offer control over individual instances in the image. We introduce InstanceDiffusion, which adds precise instance-level control to text-to-image diffusion models. InstanceDiffusion supports free-form language conditions per instance and allows flexible ways to specify instance locations, such as single points, scribbles, bounding boxes, or intricate instance segmentation masks, as well as combinations thereof. We propose three major changes to text-to-image models that enable precise instance-level control: our UniFusion block enables instance-level conditions, the ScaleU block improves image fidelity, and our Multi-instance Sampler improves generation when multiple instances are specified. InstanceDiffusion significantly surpasses specialized state-of-the-art models for each location condition. Notably, on the COCO dataset, we outperform the previous state of the art by 20.4% AP50 (box) for box inputs and by 25.4% IoU for mask inputs.
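
One way to picture how a single model can accept all of these location formats is to reduce each one to a fixed-size set of normalized 2D points and embed those points the same way. The sketch below is a minimal, hypothetical illustration under that assumption: the point count, the number of Fourier frequencies, and every helper name (`resample`, `box_to_points`, `mask_to_points`, `fourier_embed`, and so on) are ours rather than InstanceDiffusion's API, and a real model would follow the embedding with learned projections.

```python
import numpy as np

# Hypothetical sketch: map every location format to N_POINTS normalized (x, y)
# points, then Fourier-embed them so all formats share one feature size.
N_POINTS = 16  # assumed number of points per instance
N_FREQS = 4    # assumed number of Fourier frequencies per coordinate


def resample(points, n=N_POINTS):
    """Pick n points evenly (by index) along a point list, repeating if needed."""
    points = np.asarray(points, dtype=np.float64).reshape(-1, 2)
    idx = np.linspace(0, len(points) - 1, n).round().astype(int)
    return points[idx]


def point_to_points(x, y):
    return resample([[x, y]])  # a single point is simply repeated


def box_to_points(x0, y0, x1, y1):
    # Box corners: top-left, top-right, bottom-right, bottom-left (y pointing down).
    return resample([[x0, y0], [x1, y0], [x1, y1], [x0, y1]])


def scribble_to_points(polyline):
    return resample(polyline)  # sample along the scribble's vertices


def mask_to_points(mask):
    # Foreground pixel coordinates of a binary mask, normalized to [0, 1].
    ys, xs = np.nonzero(mask)
    h, w = mask.shape
    return resample(np.stack([xs / (w - 1), ys / (h - 1)], axis=1))


def fourier_embed(points, n_freqs=N_FREQS):
    """Sin/cos features of each coordinate, flattened to one vector."""
    freqs = np.pi * 2.0 ** np.arange(n_freqs)                        # (F,)
    angles = points[..., None] * freqs                                # (N, 2, F)
    emb = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)  # (N, 2, 2F)
    return emb.reshape(-1)                                            # (N * 4F,)


mask = np.zeros((8, 8), dtype=bool)
mask[2:5, 3:6] = True
feats = [
    fourier_embed(point_to_points(0.5, 0.5)),
    fourier_embed(box_to_points(0.1, 0.2, 0.6, 0.9)),
    fourier_embed(scribble_to_points([[0.1, 0.1], [0.3, 0.4], [0.7, 0.5]])),
    fourier_embed(mask_to_points(mask)),
]
print([f.shape for f in feats])  # [(256,), (256,), (256,), (256,)]
```

Because every format ends up as a vector of the same length, a single downstream projection could consume any of them; the fixed point budget is the simplifying assumption that makes this possible in the sketch.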

We propose (i) UniFusion, which projects various forms of instance-level conditions into the same feature space and injects the instance-level layouts and descriptions into the visual tokens; (ii) ScaleU, which re-calibrates the main features and the low-frequency components of the skip-connection features in the UNet, enhancing the model's ability to precisely follow the specified layout conditions; and (iii) the Multi-instance Sampler, which reduces information leakage and confusion between the conditions (text and layout) of multiple instances.
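
ScaleU can be sketched as two learnable, channel-wise scale vectors: one applied directly to the UNet's main (backbone) features and one applied only to the low-frequency band of the skip-connection features in the Fourier domain, in the spirit of FreeU-style Fourier filtering. The sketch below assumes a `tanh(s) + 1` parameterization with zero initialization, a square low-frequency window of hypothetical size `radius`, and `(C, H, W)` NumPy arrays; these choices are illustrative and not taken from the released code.

```python
import numpy as np

# Hypothetical ScaleU-style sketch on (C, H, W) arrays. The tanh(s) + 1
# parameterization and the square low-frequency window are assumptions.


def scale_main(h, s_b):
    """Channel-wise re-scaling of the UNet main (backbone) features."""
    return h * (np.tanh(s_b) + 1.0)[:, None, None]


def scale_skip_low_freq(f, s_s, radius=1):
    """Re-scale only the low-frequency Fourier components of skip features."""
    c, hgt, wid = f.shape
    spec = np.fft.fftshift(np.fft.fft2(f, axes=(-2, -1)), axes=(-2, -1))
    cy, cx = hgt // 2, wid // 2  # location of the zero frequency after fftshift
    scale = np.ones((c, hgt, wid))
    scale[:, cy - radius:cy + radius + 1, cx - radius:cx + radius + 1] = (
        np.tanh(s_s) + 1.0
    )[:, None, None]
    spec = spec * scale
    out = np.fft.ifft2(np.fft.ifftshift(spec, axes=(-2, -1)), axes=(-2, -1))
    return out.real  # the window is symmetric, so the result is real up to rounding


rng = np.random.default_rng(0)
h = rng.standard_normal((4, 8, 8))     # main features entering a decoder block
skip = rng.standard_normal((4, 8, 8))  # matching skip-connection features
s_b = np.zeros(4)  # learnable in practice; zero init makes both scalings identity
s_s = np.zeros(4)
x = np.concatenate([scale_main(h, s_b), scale_skip_low_freq(skip, s_s)], axis=0)
print(x.shape)  # (8, 8, 8)
```

With zero-initialized vectors both scalings are the identity, so under this assumed parameterization the block starts out reproducing the pretrained UNet and only learns to re-weight features during fine-tuning.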

InstanceDiffusion also supports iterative image generation. Using the same initial noise and image caption, InstanceDiffusion can progressively add new instances (such as a bouquet of flowers or a candle) or edit previously generated instances (replace, move, or resize them), while only minimally altering the instances generated earlier.

Within a single unified framework, InstanceDiffusion significantly surpasses specialized state-of-the-art models for each location condition. We also provide a new set of evaluation benchmarks and metrics for measuring the performance of point- and scribble-grounded image generation.

@misc{wang2024instancediffusion,
  title={InstanceDiffusion: Instance-level Control for Image Generation},
  author={Xudong Wang and Trevor Darrell and Sai Saketh Rambhatla and Rohit Girdhar and Ishan Misra},
  year={2024},
  eprint={2402.03290},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}