Projects

2025

Robust Gymnasium: A Unified Modular Benchmark for Robust Reinforcement Learning

This benchmark aims to advance robust reinforcement learning for real-world applications and domain adaptation. It provides a comprehensive set of tasks that cover robustness requirements under uncertainty in states, actions, rewards, and environmental dynamics, and that span diverse applications including control, robot manipulation, and dexterous hands.

2024

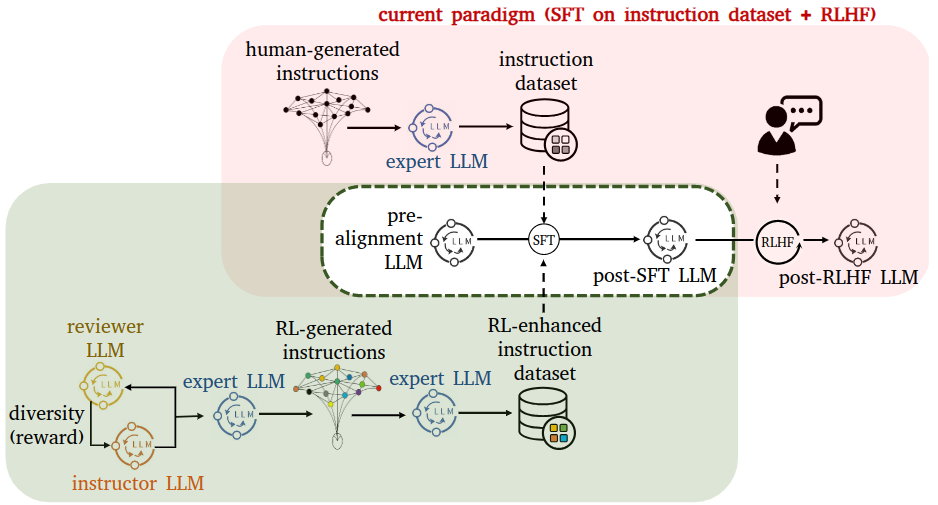

TeaMs-RL: Teaching LLMs to Teach Themselves Better Instructions via Reinforcement Learning

This work presents TeaMs-RL, a novel method that uses reinforcement learning to directly generate instruction datasets for fine-tuning LLMs. By minimizing reliance on human feedback and external queries, TeaMs-RL enhances data quality, privacy, and model capabilities, making it a valuable approach for foundation model post-training.

2024

Safe Multi-Agent Reinforcement Learning with Bilevel Optimization in Autonomous Driving

This study introduces a safe MARL method based on a Stackelberg model with bilevel optimization, featuring two algorithms, CSQ and CS-MADDPG, for autonomous driving applications. Experimental results show that both algorithms achieve better safety and performance than strong MARL baselines in challenging driving scenarios.

2023

Safe Multi-Agent Reinforcement Learning for Multi-Robot Control

We investigate safe MARL for multi-robot control on cooperative tasks, in which each robot must not only meet its own safety constraints while maximising its reward, but also consider the constraints of the other robots to guarantee safe team behaviours.