Natural Image Segmentation via Lossy Compression

|

|

|

Contents |

Abstract

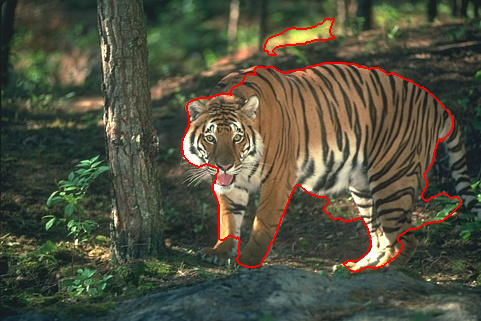

We present a novel algorithm for segmentation of natural images that harnesses the principle of minimum description length (MDL). Our method is based on observations that a homogeneously textured region of a natural image can be well modeled by a Gaussian distribution and the region boundary can be effectively coded by an adaptive chain code. The optimal segmentation of an image is the one that gives the shortest coding length for encoding all textures and boundaries in the image, and is obtained via an agglomerative clustering process applied to a hierarchy of decreasing window sizes as multi-scale texture features. The optimal segmentation also provides an accurate estimate of the overall coding length and hence the true entropy of the image. We test our algorithm on the publicly available Berkeley Segmentation Dataset. It achieves state-of-the-art segmentation results compared to other existing methods.

Adaptive Texture and Boundary Encoding

Constructing Texture Features

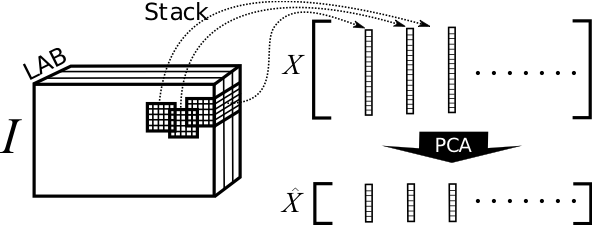

For each pixel, we apply a w × w cut-off window around the pixel across the three color channels, and stack the color values inside the window in a vector form. For ease of computation, we reduce the dimensionality of these features by projecting the set of all features X onto their first D principal components.

Adaptive Texture Encoding

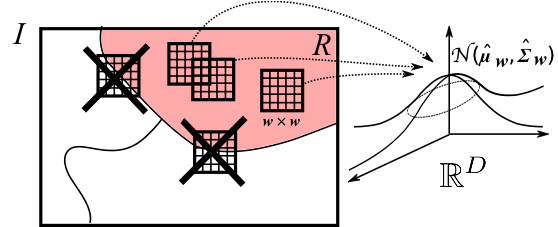

The coding length of a region is uniquely determined by the mean and covariance (μ, Σ ) of the texture windows in the region. To estimate (μ, Σ ) empirically we need to exclude the windows that cross the boundary of R:

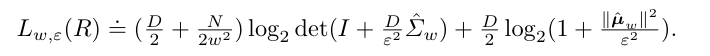

We now describe encoding these texture vectors based on the lossy MDL principle. For a region R containing N pixels, a good approximation for the optimal coding length of R up to distortion ε2 is:

Adaptive Boundary Encoding

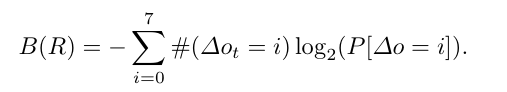

In the Freeman chain code coding scheme, the orientation of an edge otis quantized along eight discrete directions. The difference chain code between two consecutive edges ot and ot+1 is Δot = mod(ot - ot+1, 8). Given the prior distribution P[Δo] of difference chain codes in natural images, the optimal coding of the boundary B(R) is given by:

Segmentation by Minimizing Coding Length

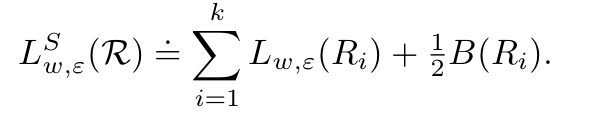

The total coding length of an image I with respect to a segmentation with non-overlapping regions R1,...,Rk is:

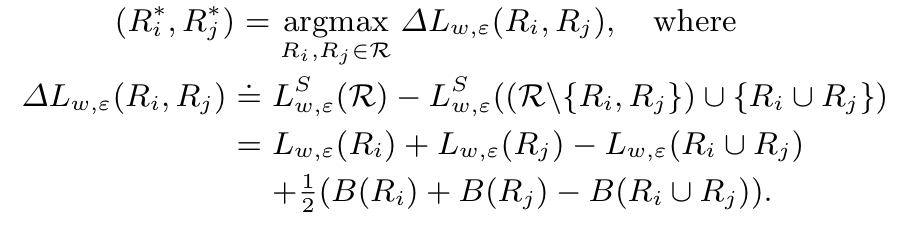

The optimal segmentation of I minimizes this total coding length function, and can often be found through the following agglomerative process. Given an oversegmentation of the image, at each iteration, we find the pair of regions Ri and Rj that maximally decrease the total coding length if merged:

To model the spatial locality of textures, we further construct a region adjacency graph where each vertex vi corresponds to a region Ri and the edge eij is present if and only if regions Ri and Rj are adjacent in the image. To perform image segmentation, we simply apply a constrained version of the agglomerative procedure - only merging regions that are adjacent in the image.

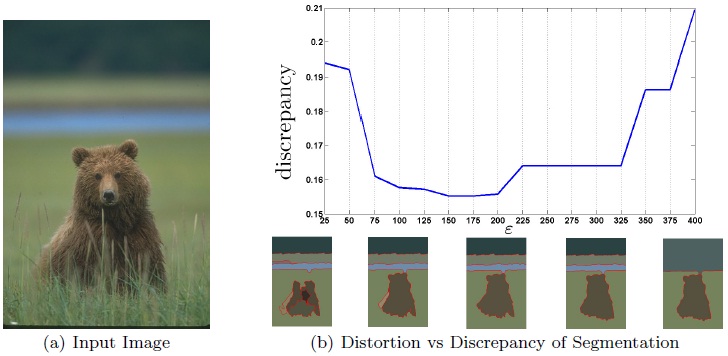

Automatic Choice of Distortion

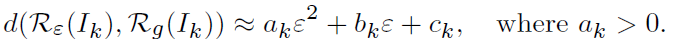

The opitmality of the distortion ε for each image is measured by the segmentation that best matches with human perception. We learn to predict ε from a set of training images {Ik} and their corresponding ground truth {Rg(Ik)}. Consider a discrepancy function d(Rε (Ik),Rg(Ik)) which measures the difference between the output and the ground truth segmentation. For each image, as ε deviates from its optimal value, it leads to over or under segmentation. Thus, we approximate d by a convex quadratic function.

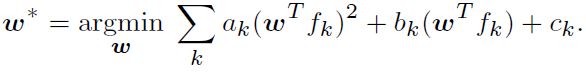

Denote some feature vector extracted from image Ik by fk . The relationship between ε and fk is model by a linear function ε=wTfk , where w is a parameter vector. The model and the quadratic approximation of d lead to the following unconstrained convex program which as closed-form solution.

Results on Public Image Database

We report the results of our image segmentation on all images in the Berkeley Segmentation Dataset. For each result, the top image is PRI-Optimized and the bottom image is VOI-Optimized segmentation. Please click on the link below to see results.

For the dataset itself, please refer to the link below:

MATLAB Software

Texture and Boundary Encoding-based Segmentation 1.0

Installation

This texture segmentation algorithm uses the superpixel code of Mori et al., which includes some c files that need to be compiled. To do this open MATLAB and then run the following commands:> cd superpixels/yu_imncut > mex csparse.c > mex ic.c > mex imnb.c > mex parmatV.c > mex spmd_imncut.c

Testing

To test our texture segmentation algorithm, run the following command in MATLAB:> test_texture_seg

This will run TBES on an example image from the Berkeley Segmentation Dataset, compute the probabilistic Rand index and variation of information segmentation metrics (in comparison with human results), and display the segmentation results. For information on computing the precision and recall curve, please refer to the Berkeley Segmentation Dataset.

Copyright

Authors: Shankar Rao, Hossein Mobahi, Allen YangContact: Hossein Mobahi: hmobahi2 AT illinois DOT EDU

(c) Copyright. University of Illinois, University of California, 2009.

Notice: The packages should NOT be used for any commercial purposes without direct consent of the authors.

Publications

- Segmentation of Natural Images by Texture and Boundary Compression. Hossein Mobahi, Shankar Rao, Allen Yang, Shankar Sastry and Yi Ma. Accepted for Publication in the Int. Journal of Computer Vision (IJCV), March 2011.

- Natural Image Segmentation with Adaptive Texture and Boundary Encoding. Shankar Rao, Hossein Mobahi, Allen Yang, Shankar Sastry and Yi Ma. Proceedings of the Asian Conference on Computer Vision (ACCV) 2009, H. Zha, R.-i. Taniguchi, and S. Maybank (Eds.), Part I, LNCS 5994, pp. 135--146, Springer. (Winner of the Best Student Paper Award (Sang Uk Lee Award)).

- Natural Image Segmentation with Adaptive Texture and Boundary Encoding. Shankar Rao, Hossein Mobahi, Allen Yang, Shankar Sastry and Yi Ma. UIUC Technical Report UILU-ENG-09-2211 DC-244, April 2009

- Unsupervised Segmentation of Natural Images via Lossy Data Compression. Allen Yang, John Wright, Shankar Sastry and Yi Ma. Computer Vision and Image Understanding, 2008

In the News

- Illinois researchers win Best Student Paper for research advancing computer vision. Web News of Coordinated Science Laboratory (CSL).

- PhD Student Wins Best Paper Award at ACCV 09. Web News of Department of Computer Science.

References

- Segmentation of Multivariate Mixed Data via Lossy Coding and Compression. Yi Ma, Harm Derksen, Wei Hong and John Wright. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2007.

- Recovering human body configurations: combining segmentation and recognition. Greg Mori, Xiaofeng Ren, Alexei A. Efros and Jitendra Malik. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2004.

- A Database of Human Segmented Natural Images and its Application to Evaluating Segmentation Algorithms and Measuring Ecological Statistics. David Martin, Charless Fowlkes, Doron Tal and Jitendra Malik. Proceedings of the International Conference on Computer Vision, 2001.

- Texton-boost: Joint appearance, shape and context modeling for multi-class object recognition and segmentation. Jamie Shotton, John Winn, Carsten Rother and Antonio Crimi. Proceedings of the European Conference on Computer Vision, 2006.

Comments? Questions? Contact Hossein Mobahi at: hmobahi2 AT illinois DOT EDU