|

|

|

|

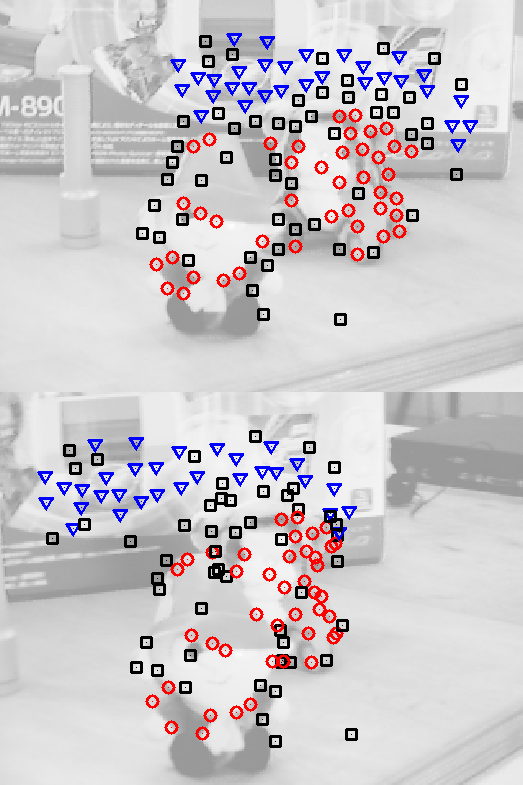

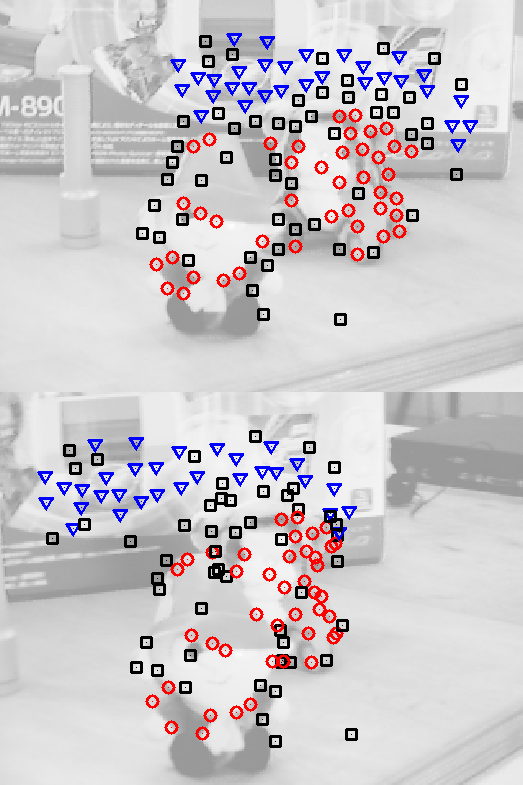

Robust 3D natural gesture recognition for wearable

Android platforms.

As the first employee and part of the founding team, I

served various functions at Atheer. My primary

responsibilities were developing 3D sensing and augmented

reality algorithms for Atheer's wearable 3D platform. My

team developed a real-time 3D natural gesture recognition

algorithm on ARM-based Android platforms that was regarded

as the best mobile gesture recognition solution. Our

proprietary augmented reality algorithms provided

industry-leading low latency and accurate 3D localization

performance. In 2014, I also served as Acting COO overseeing

the overall operation of the company.

Video Demo: https://www.youtube.com/watch?v=Rp6iawf0Dgk

Atheer Labs is featured in the following reports:

|

|

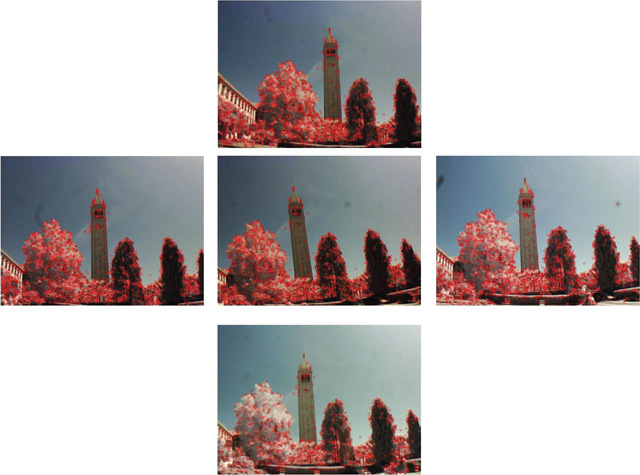

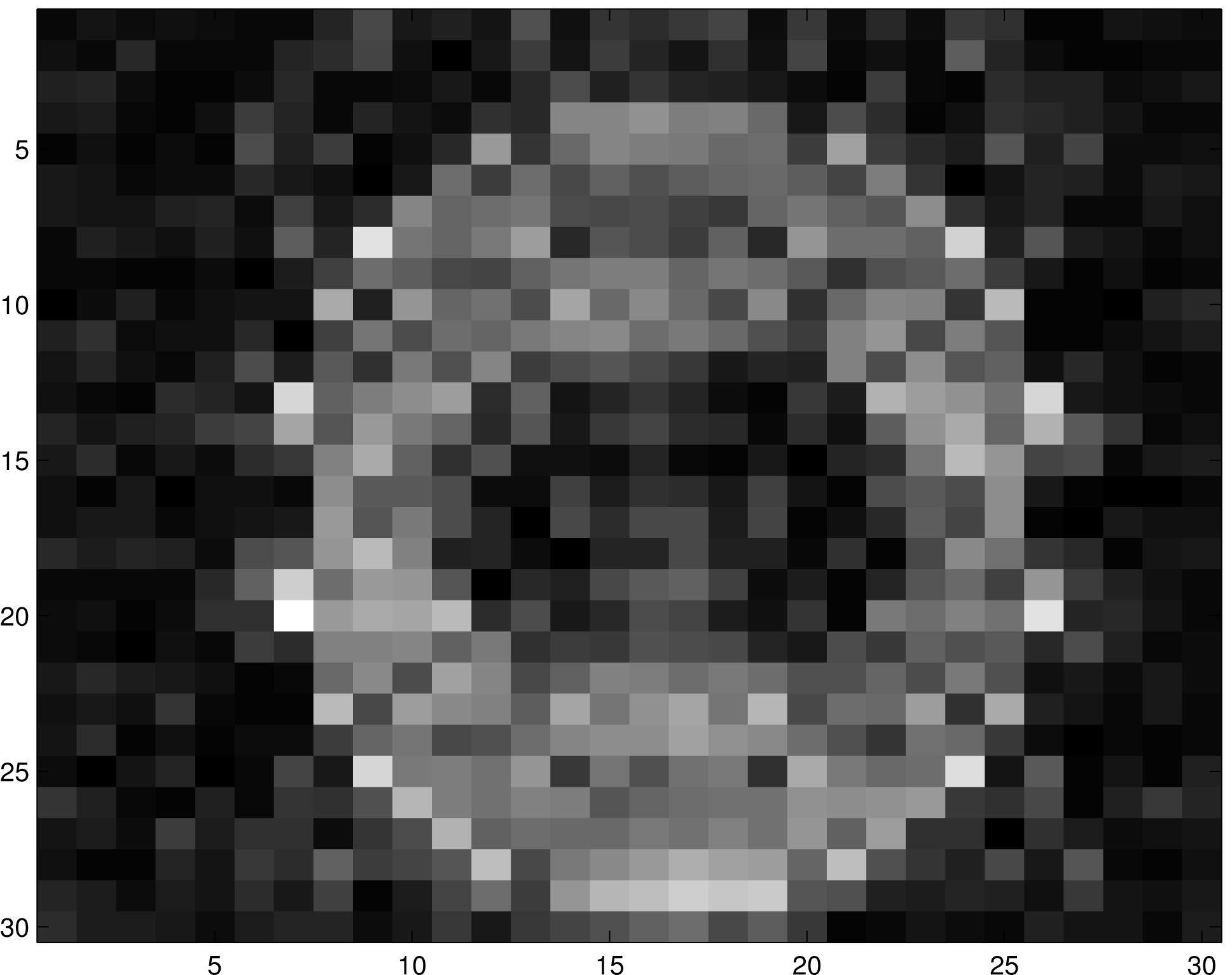

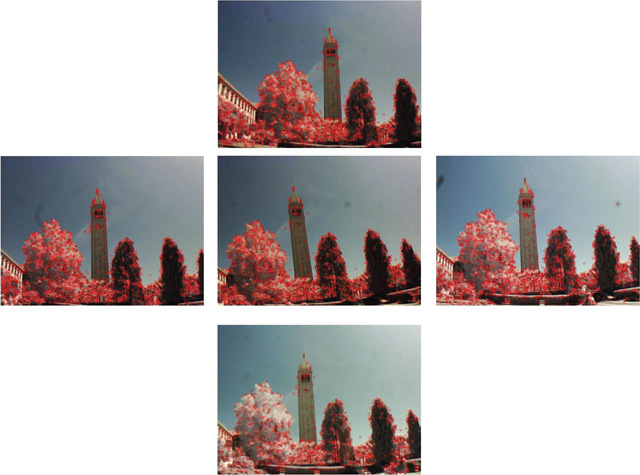

Large-scale

3-D Reconstruction of Urban Scenes via Low-Rank Textures

We introduce a new approach to reconstruct

accurate camera geometry and 3-D models for urban

structures in a holistic fashion without relying on

extraction or matching of traditional local features

such as points and edges. Instead, the new method relies

on a new set of semi-global or global features called transform invariant

low-rank texture (TILT), which are ubiquitous

in urban scenes. Modern high-dimensional optimization

techniques enable us to accurately and robustly recover

precise and consistent camera calibration and scene

geometry from a single or multiple images of the scene. |
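As a hedged sketch of the kind of optimization behind TILT, recalled from the TILT literature rather than stated on this page: for an image window $I$ and a domain transformation $\tau$ (symbols assumed here for illustration), the rectified texture is split into a low-rank part $A$ and a sparse error $E$:

$$
\min_{A,\,E,\,\tau} \; \|A\|_{*} + \lambda \|E\|_{1}
\quad \text{subject to} \quad I \circ \tau = A + E
$$

Here $\|A\|_{*}$ is the nuclear norm, used as a convex surrogate for rank, $\|E\|_{1}$ is the sum of absolute entries, and $\lambda > 0$ is an assumed weighting parameter.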

|

CPRL:

An Extension of Compressive Sensing to the Phase

Retrieval Problem

This paper

presents a novel extension of CS to the phase

retrieval problem, where intensity measurements of a

linear system are used to recover a complex sparse

signal. We propose a novel solution using a lifting

technique -- CPRL, which relaxes the NP-hard problem to a nonsmooth

semidefinite program. Our analysis shows that CPRL

inherits many desirable properties from CS, such as

guarantees for exact recovery. We further provide

scalable numerical solvers to accelerate its

implementation.
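The lifting step mentioned above can be sketched as follows. The symbols $a_i$, $b_i$, $\Phi_i$, $X$, and $\lambda$ are assumptions introduced for illustration, and the exact objective in the paper may differ. Each intensity measurement is quadratic in the unknown signal $x$ but linear in the lifted matrix $X = x x^{H}$:

$$
b_i = |a_i^{H} x|^{2} = a_i^{H} x x^{H} a_i = \operatorname{Tr}(\Phi_i X),
\qquad \Phi_i = a_i a_i^{H}, \quad X = x x^{H}
$$

Dropping the rank-one requirement on $X$ and penalizing its trace and entrywise $\ell_1$ norm gives a semidefinite program of roughly this form:

$$
\min_{X} \; \operatorname{Tr}(X) + \lambda \|X\|_{1}
\quad \text{subject to} \quad b_i = \operatorname{Tr}(\Phi_i X), \;\; X \succeq 0
$$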

Matlab Code: http://users.isy.liu.se/rt/ohlsson/code.html

arXiv Tech Report: http://arxiv.org/pdf/1111.6323.pdf

|

|

L-1 Minimization via

Augmented Lagrangian Methods and Benchmark

We provide a comprehensive review of five

representative approaches, namely, Gradient Projection,

Homotopy, Iterative Shrinkage-Thresholding, Proximal

Gradient, and Augmented Lagrangian Methods. The work is

intended to fill in a gap in the existing literature to

systematically benchmark the performance of these

algorithms using a consistent experimental setting. In

particular, the paper will focus on a recently proposed

face recognition algorithm, where a sparse representation

framework has been used to recover human identities from

facial images that may be affected by illumination,

occlusion, and facial disguise. |
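Of the five families reviewed, Iterative Shrinkage-Thresholding is perhaps the simplest to sketch. The following is a minimal pure-Python illustration, not the benchmark code: the function names `soft_threshold` and `ista`, the objective $\tfrac{1}{2}\|Ax-b\|_2^2 + \lambda\|x\|_1$, and the fixed step size are all assumptions made for this example.

```python
def soft_threshold(v, t):
    """Shrink each entry of v toward zero by t (proximal map of t * ||.||_1)."""
    return [max(abs(x) - t, 0.0) * (1.0 if x > 0 else -1.0) for x in v]


def ista(A, b, lam, step, iters=500):
    """Minimize 0.5 * ||A x - b||^2 + lam * ||x||_1 by iterative shrinkage.

    A is a list of rows, b a list. `step` is assumed to be at most
    1 / (largest eigenvalue of A^T A); this sketch does not check that.
    """
    m, n = len(A), len(A[0])
    x = [0.0] * n
    for _ in range(iters):
        # Residual r = A x - b.
        r = [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(m)]
        # Gradient of the smooth term: g = A^T r.
        g = [sum(A[i][j] * r[i] for i in range(m)) for j in range(n)]
        # Gradient step followed by soft-thresholding.
        x = soft_threshold([x[j] - step * g[j] for j in range(n)], step * lam)
    return x


# Underdetermined toy system: b is generated by the sparse vector [0, 0, 1].
A = [[1.0, 0.0, 1.0],
     [0.0, 1.0, 1.0]]
b = [1.0, 1.0]
x_hat = ista(A, b, lam=0.1, step=1.0 / 3.0)  # largest eigenvalue of A^T A is 3
```

In this toy case the estimate concentrates on the third coordinate, which is the sparsest vector consistent with `b`. The accelerated and augmented Lagrangian variants covered by the review change the update rule but keep the same shrinkage step at their core.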

|

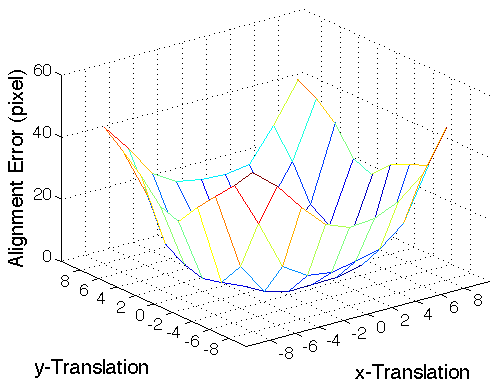

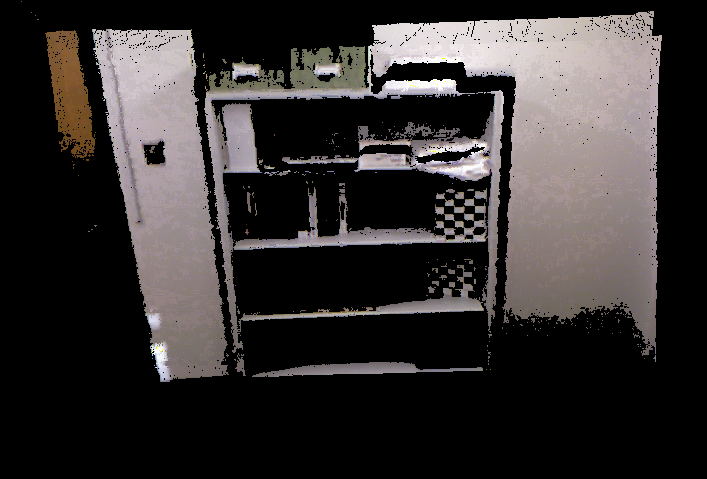

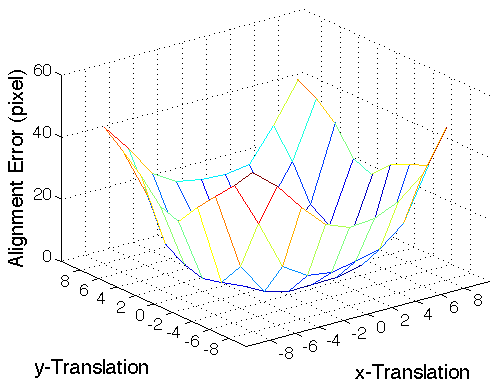

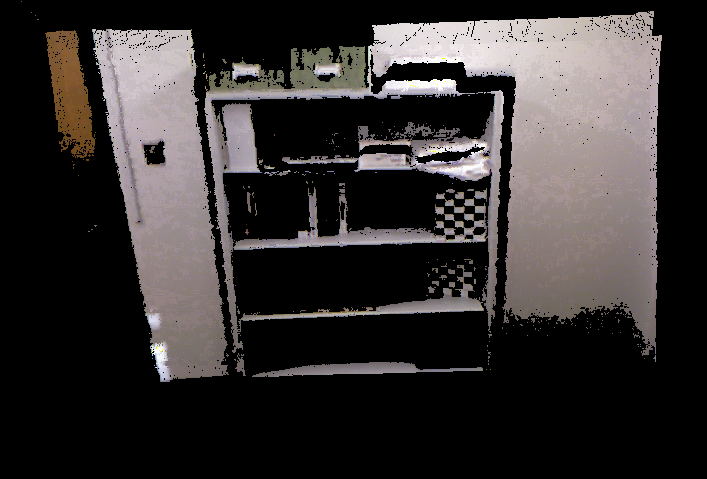

SOLO:

Sparse Online Low-Rank Projection and Outlier

Rejection

Motivated by an emerging theory of robust low-rank matrix

representation, we introduce a novel solution for

online rigid-body motion registration. The goal is to

develop algorithmic techniques that enable a robust,

real-time motion registration solution suitable for

low-cost, portable 3-D camera devices. The accuracy of

the solution is validated through extensive simulation

and a real-world experiment, while the system enjoys

one to two orders of magnitude speed-up compared to

well-established RANSAC solutions.

|

|

Sparse PCA via Augmented

Lagrangian Methods and Application to Informative

Feature Selection

We propose a novel method to select informative object

features using a more efficient algorithm called Sparse

PCA. First, we show that using a large-scale multiple-view

object database, informative features can be reliably

identified from a high-dimensional visual dictionary by

applying Sparse PCA on the histograms of each object

category. Our experiment shows that the new algorithm

improves recognition accuracy compared to the traditional

BoW methods and SfM methods. Second, we present a new

solution to Sparse PCA as a semidefinite programming

problem using the Augmented Lagrangian Method.
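For orientation, a widely used semidefinite relaxation of Sparse PCA is sketched below. Whether this work uses exactly this form is an assumption; the symbols $\Sigma$ (sample covariance), $X$ (lifted variable), and $\rho$ (sparsity weight) are introduced here for illustration only:

$$
\max_{X} \; \operatorname{Tr}(\Sigma X) - \rho \|X\|_{1}
\quad \text{subject to} \quad \operatorname{Tr}(X) = 1, \;\; X \succeq 0
$$

Here $\|X\|_{1}$ denotes the sum of absolute entries of $X$. A sparse principal direction is then typically read off from the leading eigenvector of the optimal $X$.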

Source code in MATLAB: http://www.eecs.berkeley.edu/~yang/software/SPCA/SPCA_ALM.zip |

|

d-Oracle: Distributed

Object Recognition via a Camera Wireless Net

Harnessing the multiple-view information from a wireless

camera sensor network to improve the recognition of

objects or actions.

Berkeley Multiview

Wireless (BMW) database now available! |

|

d-WAR: Distributed Wearable Action Recognition

We propose a distributed recognition method to classify

human actions using a low-bandwidth wearable motion sensor

network. Given a set of pre-segmented motion sequences as

training examples, the algorithm simultaneously segments

and classifies human actions, and it also rejects outlying

actions that are not in the training set. The

classification runs in a distributed fashion on individual

sensor nodes and a base station computer. Using up to

eight body sensors, the algorithm achieves

state-of-the-art 98.8% accuracy on a set of 12 action

categories. We further demonstrate that the recognition

precision degrades only gracefully when using smaller subsets

of sensors, which validates the robustness of the

distributed framework.

Wearable Action

Recognition Database (WARD) ver 1.0 available for

download. |

|

Image

Analysis and Segmentation via Lossy Data Compression

We cast natural-image segmentation as a problem of

clustering texture features as multivariate mixed data. We

model the distribution of the texture features using a

mixture of Gaussian distributions. Unlike most existing

clustering methods, we allow the mixture components to be

degenerate or nearly-degenerate. We contend that this

assumption is particularly important for mid-level image

segmentation, where degeneracy is typically introduced by

using a common feature representation for different

textures in an image. We show that such a mixture

distribution can be effectively segmented by a simple

agglomerative clustering algorithm derived from a lossy

data compression approach. |
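As a hedged illustration of the lossy-compression criterion, one coding-length function commonly used in this line of work is given below. It is recalled from the related literature, not quoted from this page, and is shown in its zero-mean form. For $N$ feature vectors of dimension $D$ stacked as the columns of $W$, and an allowed distortion $\varepsilon$ (all symbols assumed here):

$$
L(W) = \frac{N + D}{2} \, \log_2 \det\!\left( I + \frac{D}{\varepsilon^{2} N} \, W W^{T} \right)
$$

For a segmentation into groups $W_1, \dots, W_K$ with $N_k$ vectors each, the total length adds the cost of encoding group membership:

$$
L^{s}(W_1, \dots, W_K) = \sum_{k=1}^{K} \left[ L(W_k) - N_k \log_2 \frac{N_k}{N} \right]
$$

An agglomerative scheme of this kind starts with every feature vector in its own group and repeatedly merges the pair of groups that most reduces $L^{s}$, stopping when no merge reduces it further.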

|

Feature

Selection in Face Recognition: A Sparse Representation

Perspective

Formulating the problem of face recognition under the

emerging theory of compressed sensing, we examine the role

of feature selection/dimensionality reduction from the

perspective of sparse representation. Our experiments show

that if sparsity in the recognition problem is properly

harnessed, the choice of features is no longer critical.

What is critical is whether the number of features is

sufficient and whether the sparse representation is

correctly found. |

|

Robust Algebraic Segmentation of Mixed

Rigid-Body and Planar Motions in Two Views

We study segmentation of multiple rigid-body

motions in a 3-D dynamic scene under perspective camera

projection. Based on the well-known epipolar and

homography constraints between two views, we propose a

hybrid perspective constraint (HPC) to unify the

representation of rigid-body and planar motions. Given a

mixture of K hybrid perspective constraints, we propose an

algebraic process to partition image correspondences to

the individual 3-D motions, called Robust Algebraic

Segmentation (RAS). We conduct extensive simulations and

real experiments to validate the performance of the new

algorithm. The results demonstrate that RAS achieves

notably higher accuracy than most existing robust motion

segmentation methods, including random sample consensus

(RANSAC) and its variations. The implementation of the

algorithm is also two to three times faster than the

existing methods. We will make the implementation of the

algorithm and the benchmark scripts available on our

website.
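For reference, the two classical two-view constraints that the hybrid constraint builds on can be written as follows, with $x_1, x_2$ denoting corresponding image points in homogeneous coordinates (notation assumed here). A general rigid-body motion satisfies the epipolar constraint with a fundamental matrix $F$:

$$
x_2^{T} F \, x_1 = 0
$$

A planar motion satisfies the homography constraint with a $3 \times 3$ matrix $H$:

$$
x_2 \times (H x_1) = 0
\quad \text{(equivalently, } x_2 \sim H x_1 \text{ up to scale)}
$$

The exact form of the hybrid perspective constraint is not given on this page and is not reproduced here.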

|

|

Generalized

Principal Component Analysis (GPCA)

An algebraic framework for modeling and segmenting

mixed data using a union of subspaces, a.k.a. subspace

arrangements. The statistical implementation of the

framework is robust to data noise and outliers.
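The algebraic idea can be illustrated on the smallest possible case: noise-free points on two lines through the origin in the plane. The sketch below is a toy written for this page, not the GPCA implementation; all function names are hypothetical, and the cross-product shortcut only works for this exact two-line, noise-free setting. It fits a quadratic that vanishes on both lines, then uses the gradient of that quadratic at each point as the normal of the line containing it.

```python
from itertools import combinations


def veronese2(p):
    """Degree-2 Veronese embedding of a 2-D point: (x, y) -> (x^2, xy, y^2)."""
    x, y = p
    return (x * x, x * y, y * y)


def cross3(u, v):
    """Cross product of two 3-vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def fit_vanishing_quadratic(points):
    """Return c such that c . veronese2(p) = 0 for every input point.

    For two lines through the origin the embedded points span a 2-D subspace
    of R^3, so the cross product of two independent embeddings is normal to it.
    """
    for p, q in combinations(points, 2):
        c = cross3(veronese2(p), veronese2(q))
        if any(abs(ci) > 1e-12 for ci in c):
            return c
    raise ValueError("need points from two distinct lines")


def gradient(c, p):
    """Gradient of c0*x^2 + c1*x*y + c2*y^2 at p; normal to the line through p."""
    x, y = p
    return (2 * c[0] * x + c[1] * y, c[1] * x + 2 * c[2] * y)


def segment(points, tol=1e-9):
    """Label each point by its line; points at the origin are assumed excluded."""
    c = fit_vanishing_quadratic(points)
    normals, labels = [], []
    for p in points:
        g = gradient(c, p)
        for k, n in enumerate(normals):
            cross = g[0] * n[1] - g[1] * n[0]
            scale = (g[0] ** 2 + g[1] ** 2) ** 0.5 * (n[0] ** 2 + n[1] ** 2) ** 0.5
            if abs(cross) <= tol * scale:  # parallel normals: same line
                labels.append(k)
                break
        else:
            normals.append(g)
            labels.append(len(normals) - 1)
    return labels


# Three points on the line y = x, then three on the line y = -2x.
pts = [(1, 1), (2, 2), (-3, -3), (1, -2), (2, -4), (-1, 2)]
labels = segment(pts)
```

With noisy data or higher-dimensional subspaces the cross product would be replaced by a null-space or least-squares fit over the embedded data, which is where the statistical treatment mentioned above comes in.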

|

|

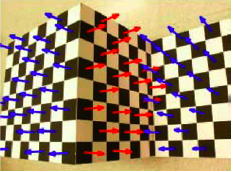

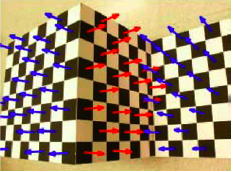

Symmetry-based

3-D Reconstruction from Perspective Images

We investigated

a unified framework to extract poses and structures of

2-D symmetric patterns from perspective images. The

framework uniformly encompasses all three fundamental

types of symmetry: Reflection, Rotation, and

Translation, based on a systematic study of the

homography groups in the image induced by the symmetry

groups in space.

We claim the following principle: If a planar object

admits rich enough symmetry, no 3-D geometric

information is lost through perspective imaging.

|

|

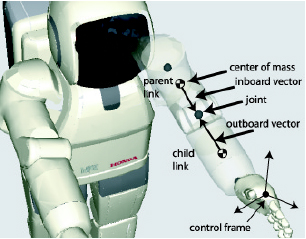

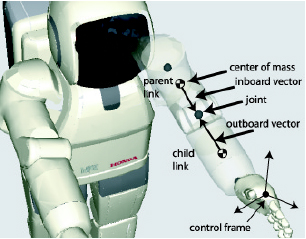

RoboTalk

A unified robot motion interface and tele-communication

protocols for controlling arms, bases, and androids.

Copyright (c) Honda Research, Mountain View, CA.

|