Debiased Learning from Naturally Imbalanced Pseudo-Labels

Xudong Wang

Zhirong Wu

Long Lian

Stella Yu

UC Berkeley / ICSI, Microsoft

[Preprint]

[PDF]

[Code]

[Citation]

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2022)


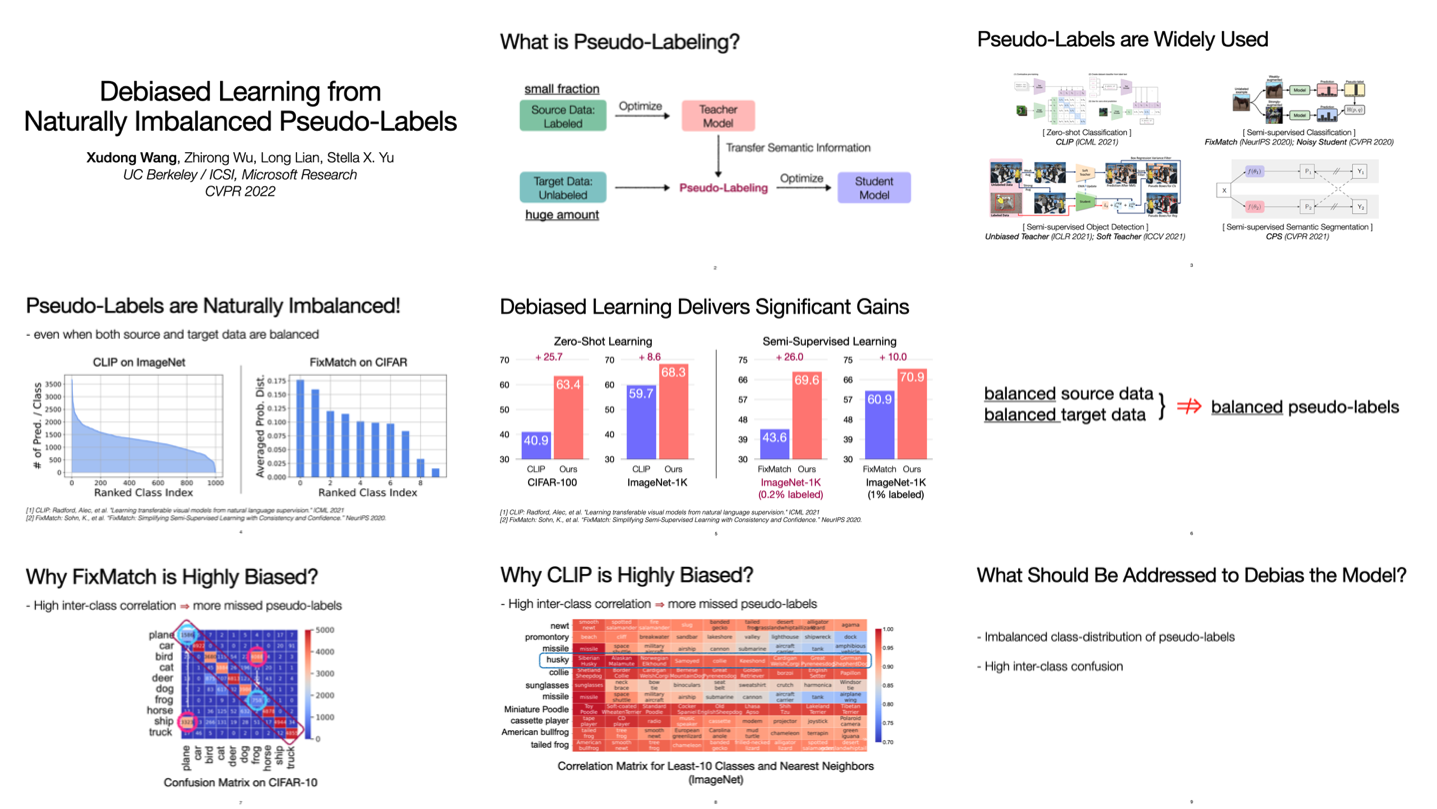

Pseudo-labels are confident predictions made on unlabeled target data by a classifier trained on labeled source data. They are widely used for adapting a model to unlabeled data, e.g., in a semi-supervised learning setting.
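As a rough illustration of the definition above, the sketch below keeps a prediction as a pseudo-label only when its softmax confidence clears a threshold. The function names and the `threshold=0.95` default are assumptions chosen for illustration, not values taken from this work.

```python
import math

def softmax(logits):
    """Convert raw classifier scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def pseudo_label(logits, threshold=0.95):
    """Return (class_index, confidence) for a confident prediction, else None.

    `threshold` is a hypothetical confidence cutoff used only in this sketch.
    """
    probs = softmax(logits)
    conf = max(probs)
    if conf < threshold:
        return None  # not confident enough: the sample gets no pseudo-label
    return probs.index(conf), conf
```

An unlabeled sample with logits `[10.0, 0.0, 0.0]` would receive class 0 as its pseudo-label, while one with nearly flat logits would be left unlabeled.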

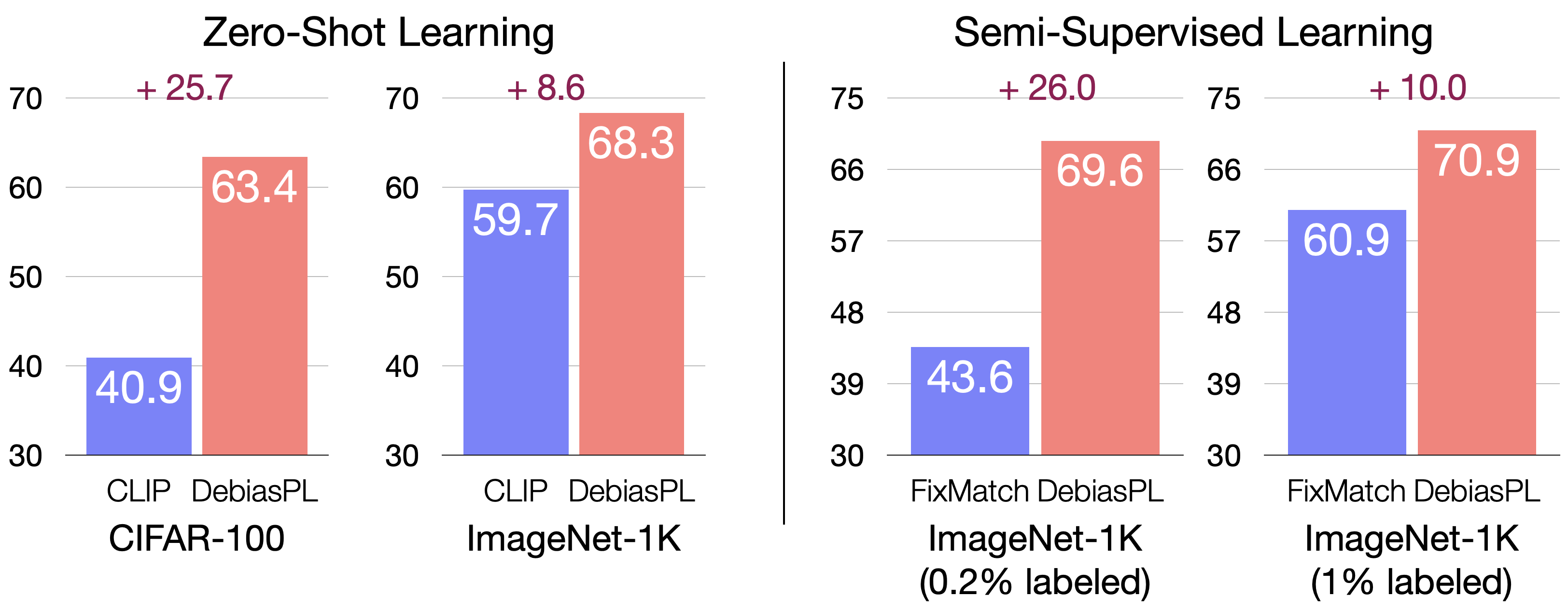

Our key insight is that pseudo-labels are naturally imbalanced due to intrinsic data similarity, even when a model is trained on balanced source data and evaluated on balanced target data. Addressing this previously unknown imbalanced classification problem, which arises from pseudo-labels rather than ground-truth training labels, lets us remove model biases towards false majorities created by pseudo-labels. We propose a novel and effective debiased learning method with pseudo-labels, based on counterfactual reasoning and adaptive margins: the former removes the classifier response bias, whereas the latter adjusts the margin of each class according to the imbalance of pseudo-labels. Validated by extensive experimentation, our simple debiased learning delivers significant accuracy gains over the state of the art on ImageNet-1K: 26% for semi-supervised learning with 0.2% annotations and 9% for zero-shot learning.


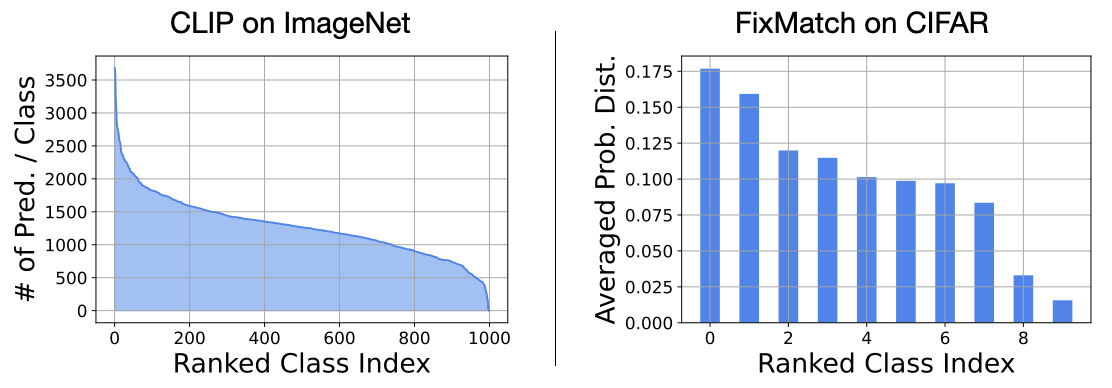

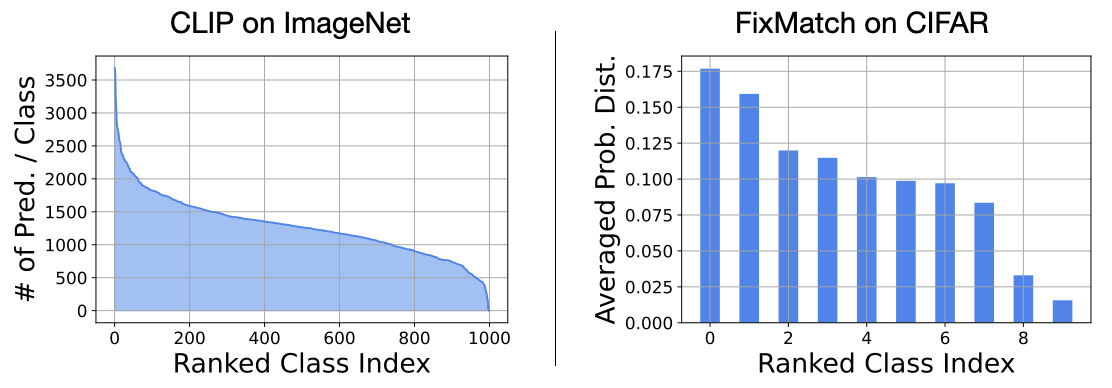

Surprisingly, we find that the pseudo-labels of target data produced by typical Semi-Supervised Learning (SSL) and transductive Zero-Shot Learning (ZSL) methods (FixMatch and CLIP, respectively) are highly biased, even when both source and target data are class-balanced or even sampled from the same domain. This imbalance is not specific to a single dataset: it can be observed across almost all datasets we experimented with. Please check our paper for more details.
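One simple way to check for this kind of bias on your own data is to count how many pseudo-labels each class receives and compare the largest count with the smallest. The helpers below are a minimal sketch; their names and the max/min ratio as an imbalance measure are assumptions for illustration, not the analysis used in the paper.

```python
from collections import Counter

def pseudo_label_histogram(labels, num_classes):
    """Count pseudo-labels per class, including classes that got none."""
    counts = Counter(labels)
    return [counts.get(c, 0) for c in range(num_classes)]

def imbalance_ratio(hist):
    """Ratio of the most to the least frequent class (inf if a class is empty)."""
    smallest = min(hist)
    return float("inf") if smallest == 0 else max(hist) / smallest
```

On a class-balanced target set, a ratio far above 1 indicates that the pseudo-labels are skewed even though the true labels are not.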


We propose DebiasPL, a simple yet effective method that dynamically alleviates the influence of biased pseudo-labels on a student model, without using any prior knowledge of the true data distribution. It combines counterfactual reasoning and adaptive margins: the former removes the classifier response bias, whereas the latter adjusts the margin of each class according to the imbalance of pseudo-labels.
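To make the two ingredients more concrete, here is a hedged sketch of one plausible way to realize them; it is not the authors' implementation. It assumes the response bias is estimated as an exponential moving average of the model's mean prediction on unlabeled data, that logits are corrected by a log-scaled version of that estimate, and that per-class margins are derived from the same estimate. The class name `DebiasSketch` and the parameters `momentum` and `lam` are hypothetical; see the paper for the actual formulation.

```python
import math

class DebiasSketch:
    """Illustrative only: tracks average predictions and corrects logits."""

    def __init__(self, num_classes, momentum=0.999, lam=0.5):
        # Start from a uniform estimate: no class is assumed to be favored.
        self.p_hat = [1.0 / num_classes] * num_classes
        self.momentum = momentum  # assumed moving-average coefficient
        self.lam = lam            # assumed debiasing strength

    def update(self, batch_probs):
        """Fold the mean prediction of a batch into the running estimate."""
        n = len(batch_probs)
        for c in range(len(self.p_hat)):
            batch_mean = sum(p[c] for p in batch_probs) / n
            self.p_hat[c] = (self.momentum * self.p_hat[c]
                             + (1.0 - self.momentum) * batch_mean)

    def debias(self, logits):
        """Add a correction that is smaller for over-predicted classes,
        so their score drops relative to the other classes."""
        return [z - self.lam * math.log(max(p, 1e-12))
                for z, p in zip(logits, self.p_hat)]

    def margins(self):
        """Per-class offsets from the same estimate: larger for classes
        that currently receive few predictions."""
        return [self.lam * math.log(1.0 / max(p, 1e-12)) for p in self.p_hat]
```

In this sketch, pseudo-labels would be chosen from the output of `debias`, and the values from `margins` would be applied inside the training loss; both uses are assumptions about how such corrections are typically wired in.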


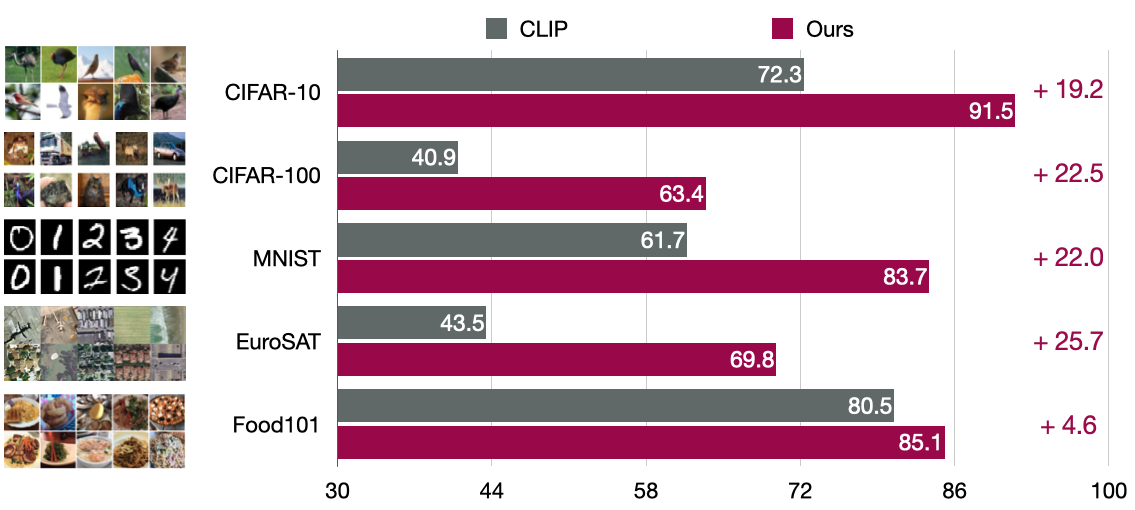

As a universal add-on, DebiasPL delivers significantly better performance than the previous state of the art on both semi-supervised learning and transductive zero-shot learning tasks. On ImageNet, it delivers accuracy gains of 26% for semi-supervised learning with 0.2% annotations and 9% for zero-shot learning.


For zero-shot learning, DebiasPL exhibits stronger robustness to domain shifts. For more results, please check our paper.


| Paper | Slides |


If you find our work inspiring or use our codebase in your research, please cite our work:

@inproceedings{wang2022debiased,
  author={Wang, Xudong and Wu, Zhirong and Lian, Long and Yu, Stella X},
  title={Debiased Learning from Naturally Imbalanced Pseudo-Labels},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2022},
}

This work was supported, in part, by Berkeley Deep Drive.