Federated Learning

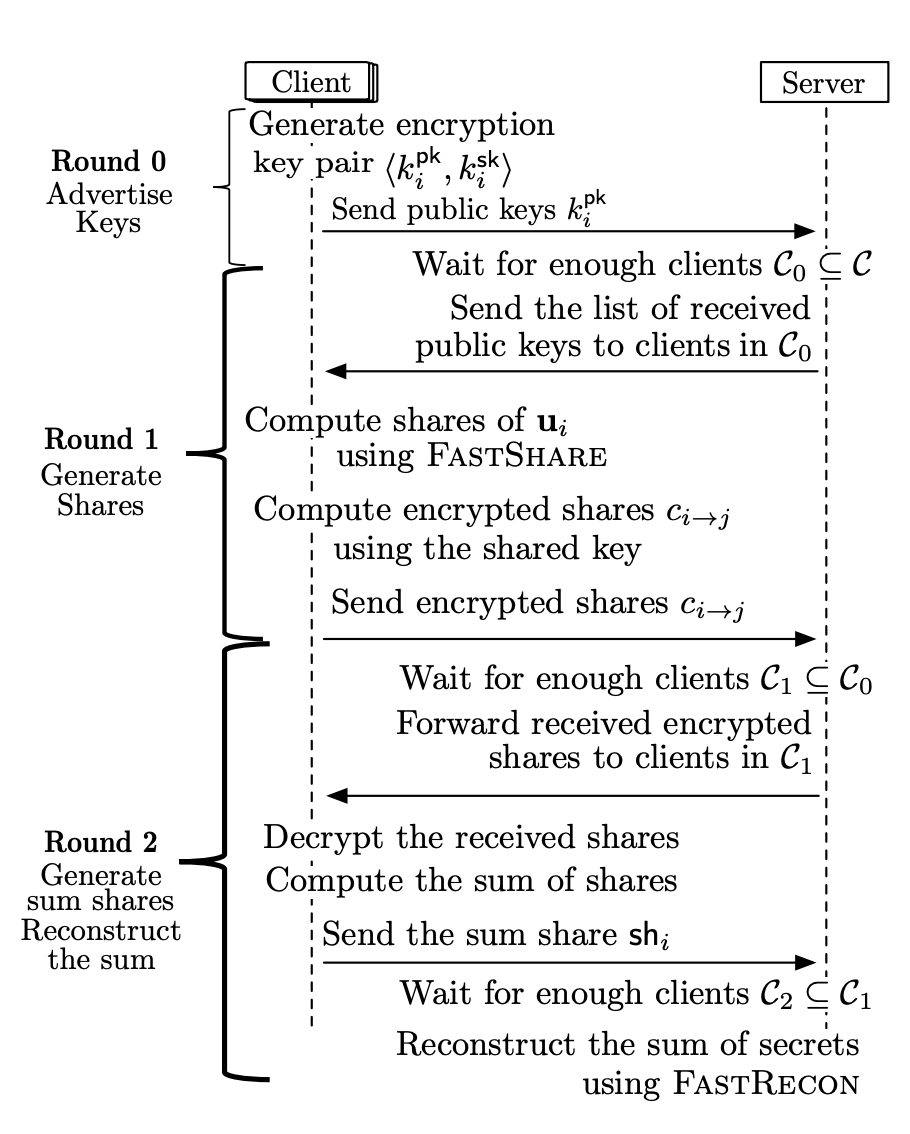

Federated learning is a popular paradigm for decentralized learning in the presence of heterogeneous learning agents. It raises the challenge of carrying out learning asynchronously, in a computation- and communication-efficient manner, in the presence of unreliable agents. We propose a communication protocol called FastSecAgg for secure aggregation in the federated learning setting that is robust to unreliable clients, whether they drop out or collude. We theoretically show that FastSecAgg is secure against the server colluding with any 10% of the clients in the honest-but-curious model, while also tolerating the dropout of a random 10% of the clients. These guarantees come at a lower computation cost than existing schemes, with the same (order-wise) communication cost. FastSecAgg is also shown to be secure against adaptive adversaries, who can corrupt clients dynamically during the execution of the protocol.
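The general idea behind secret-sharing-based secure aggregation can be illustrated with ordinary Shamir secret sharing. Because the sharing is linear, each client can add the shares it receives, and the server reconstructs only the sum of the inputs. This is a minimal sketch, not the FastSecAgg protocol itself. It shares one scalar per client, and the prime field, the function names (`share`, `reconstruct`, `secure_sum`), and the dropout model (clients drop out after distributing their shares) are all illustrative assumptions.

```python
import random

PRIME = 2**61 - 1  # illustrative field size (a Mersenne prime), not from the protocol


def share(secret, threshold, n_clients, rng):
    """Shamir-share `secret` at evaluation points 1..n_clients.
    Any `threshold` shares reconstruct it; fewer reveal nothing about it."""
    coeffs = [secret % PRIME] + [rng.randrange(PRIME) for _ in range(threshold - 1)]
    shares = {}
    for x in range(1, n_clients + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule for the polynomial at x
            y = (y * x + c) % PRIME
        shares[x] = y
    return shares


def reconstruct(points):
    """Lagrange interpolation at x = 0 from a dict {x: y}."""
    total = 0
    for xi, yi in points.items():
        num, den = 1, 1
        for xj in points:
            if xj != xi:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        total = (total + yi * num * pow(den, -1, PRIME)) % PRIME
    return total


def secure_sum(inputs, threshold, dropped, seed=0):
    """Hypothetical aggregation round: returns the sum of `inputs` while the
    server only ever sees sums of shares, never an individual share."""
    rng = random.Random(seed)
    n = len(inputs)
    # Client i sends share j of its input to client j.
    all_shares = [share(v, threshold, n, rng) for v in inputs]
    # Each surviving client adds the shares it received; by linearity the
    # result is a share of the sum of all inputs.
    survivors = [j for j in range(1, n + 1) if j not in dropped]
    summed = {j: sum(s[j] for s in all_shares) % PRIME for j in survivors}
    # The server needs summed shares from any `threshold` surviving clients.
    if len(summed) < threshold:
        raise ValueError("too many dropouts to reconstruct the sum")
    chosen = dict(list(summed.items())[:threshold])
    return reconstruct(chosen)
```

For example, `secure_sum([3, 5, 7, 11, 13], threshold=3, dropped={2, 4})` returns 39 even though two clients never report their summed shares. Up to `threshold - 1` colluding clients learn nothing about another client's input from the shares they hold. Tolerating more dropouts requires a lower threshold, which in turn tolerates less collusion. This is the kind of trade-off that the 10% collusion and 10% dropout guarantees quantify.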

Publications

A. Ghosh, J. Chung, D. Yin, K. Ramchandran, “An Efficient Framework for Clustered Federated Learning”, IEEE Transactions on Information Theory 2022.

Z. Zhang, Y. Yang, Z. Yao, Y. Yan, J. Gonzalez, K. Ramchandran, M. Mahoney, “Improving Semi-supervised Federated Learning by Reducing the Gradient Diversity of Models”, IEEE International Conference on Big Data 2021.

S. Kadhe, N. Rajaraman, O.O. Koyluoglu, K. Ramchandran, “FastSecAgg: Scalable Secure Aggregation for Privacy-Preserving Federated Learning”, arXiv 2020.