Recognizing Action at A Distance

A.A. Efros, A.C. Berg, G. Mori and J. Malik

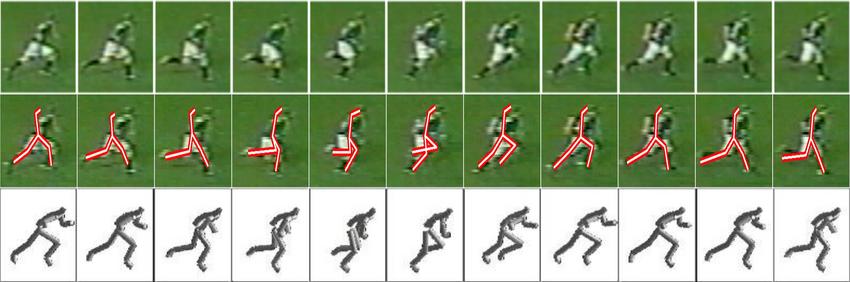

Our goal is to recognize human actions at a distance, at resolutions where a whole person may be, say, 30 pixels tall. We introduce a novel motion descriptor based on optical flow measurements in a spatio-temporal volume for each stabilized human figure, and an associated similarity measure to be used in a nearest-neighbor framework. Making use of noisy optical flow measurements is the key challenge, which is addressed by treating optical flow not as precise pixel displacements, but rather as a spatial pattern of noisy measurements which are carefully smoothed and aggregated to form our spatio-temporal motion descriptor. To classify the action being performed by a human figure in a query sequence, we retrieve nearest neighbor(s) from a database of stored, annotated video sequences. We can also use these retrieved exemplars to transfer 2D/3D skeletons onto the figures in the query sequence, as well as two forms of data-based action synthesis ``Do as I Do'' and ``Do as I Say''. Results are demonstrated on ballet, tennis as well as football datasets.

Here are some of the images from the paper, plus some more images and videos (DivX required) that didn't make it into the printed version.

best-match.avi: Video showing the best

motion descriptor matches for a short running sequence (see Figure 7

in paper).

player-match.avi: Video showing the best

matches drawn from a single player (no smoothing!)

GregWorldCup.avi: Greg in the

World Cup (38 MB!) -- an extended "Do as I Do" action synthesis

and retargetting example.

daid-tennis.avi: "Do as I Do" video

for tennis sequence (top: "driver", bottom:"target"). Although the

positions of the two figures are different, their actions are

matched.