Preparing for PhD Job Interviews

Prepare for ML/CV interviews as a fresh PhD grad.

I applied during the peak of COVID-19, probably one of the worst times to search for a job. The upside was that I had more time to prepare for the interviews, and I revisited concepts that I rarely used in my research but that ended up giving me more intuition. Thinking about basic concepts after years of building insight through research in a particular area is a very different experience: we can extract more juice and inspiration from the simplest things. I think of this as a reverse learning process.

Throughout this month of dedicated preparation, I read some articles that I found very helpful and others that were repetitive. I hope the following curated list of resources and concepts saves you some time navigating the noisy internet and points you to more thoughtfully created content.

In particular, I'll focus on research interviews in computer vision and machine learning, and touch briefly on coding interviews.

Computer Vision Interviews

We should expect questions from anywhere in what we learned in "Intro to computer vision" or "Computational photography". Even if we've only done research in 2D, we should still know epipolar geometry; even if we work on recognition or detection, we should still know the noise types of a camera sensor. Most questions will be about low-level image processing and classic (i.e. non-deep) algorithms.

Basic concepts

For each of the following, we should be able to explain what it is (in 2-3 sentences) and in what context it is used.

- ISP, the image processing pipeline (including each individual step, e.g. denoising, demosaicing, white balance, compression)

- Camera sensor noise types and their distributions (read / thermal noise, shot noise, fixed pattern noise, dark current noise)

- SNR: its definition, and how it relates to ISO, exposure time and aperture

- Image filtering: what to use for denoising / sharpening, antialiasing, the bilateral filter

- Image sampling: the Nyquist frequency, Fourier analysis, and how sampling relates to the camera PSF

- The thin lens model: its assumptions, a basic illustration and ray properties, how to draw the circle of confusion, where the depth of field / focus lies, etc.

- Lens aberrations (e.g. field curvature, chromatic aberration)

- Panoramic image stitching (from feature detection and matching to blending, plus the various feature detection and matching algorithms)

- Plenoptic function: what its parameters are, and how it is used in a light field camera

- Camera response curve

- Geometric transformations: homogeneous coordinates, the transformations with different degrees of freedom (e.g. rigid, affine, homography), the use case for each, and their constraints / assumptions

- Structure from motion: the algorithm pipeline (also epipolar geometry, fundamental and essential matrices)

- Photometric stereo: the algorithm pipeline

- Comp Photo by Marc Levoy and Fredo Durand: don't miss this particular session, taught by people who know computational photography and image processing extremely well

- These Flash applets explain intriguing and confusing concepts interactively

- Computational imaging course by Gordon Wetzstein: useful slides on camera sensors and the ISP

- Image noise explained

- Cambridge colour tutorials: one of my favorite go-to websites for building intuition about technical concepts

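To make the sensor noise and SNR bullets concrete, here is a minimal simulation sketch. It assumes a simplified sensor with only Poisson shot noise and Gaussian read noise (no dark current, fixed pattern noise or quantization), and the electron rate and read-noise values are made-up illustration parameters. Exposure time and aperture both enter through the number of collected electrons; ISO is not modeled here.

```python
import numpy as np

def simulate_pixel(electron_rate, exposure_s, read_noise_e, n_samples, rng):
    """Electron counts for one pixel over many independent captures.

    Simplified model (an assumption): Poisson shot noise on the collected
    photo-electrons plus zero-mean Gaussian read noise. Dark current, fixed
    pattern noise and quantization are ignored.
    """
    mean_electrons = electron_rate * exposure_s
    shot = rng.poisson(mean_electrons, size=n_samples).astype(float)
    read = rng.normal(0.0, read_noise_e, size=n_samples)
    return shot + read

def analytic_snr(electron_rate, exposure_s, read_noise_e):
    """SNR = S / sqrt(S + sigma_read^2), with S the mean signal in electrons."""
    signal = electron_rate * exposure_s
    return signal / np.sqrt(signal + read_noise_e ** 2)

rng = np.random.default_rng(0)
for t in (0.01, 0.04, 0.16):  # each step collects 4x more light
    s = simulate_pixel(1e4, t, read_noise_e=5.0, n_samples=100_000, rng=rng)
    print(f"t={t:.2f}s  simulated SNR={s.mean() / s.std():.1f}  "
          f"analytic SNR={analytic_snr(1e4, t, 5.0):.1f}")
```

In the shot-noise-limited regime (S much larger than the read-noise variance) SNR is roughly sqrt(S), so 4x more light, from a longer exposure or a wider aperture, only about doubles the SNR; in very low light, read noise dominates and SNR grows closer to linearly with the signal.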

Derivations

For the following, it helps to write out the derivation or code things up.

- HDR imaging: multi-exposure fusion to recover the radiance map, and tone mapping

- Image histogram equalization

- RANSAC: outlier-robust model fitting

- Fundamental matrix and essential matrix derivation

- Camera depth of field calculation
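Histogram equalization maps each intensity through the normalized cumulative histogram (CDF), which spreads the occupied intensity levels over the full 0..255 range. A minimal NumPy sketch for 8-bit grayscale images; `equalize_histogram` is a hypothetical helper name, and the `cdf_min` normalization follows the common textbook formulation.

```python
import numpy as np

def equalize_histogram(img):
    """Histogram-equalize an 8-bit grayscale image (2D uint8 array)."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]          # CDF value at the darkest occupied level
    total = img.size
    if total == cdf_min:               # constant image: nothing to stretch
        return img.copy()
    # Look-up table: normalized CDF rescaled to [0, 255]
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]

rng = np.random.default_rng(0)
low_contrast = rng.integers(100, 120, size=(64, 64), dtype=np.uint8)
out = equalize_histogram(low_contrast)
print(low_contrast.min(), low_contrast.max(), out.min(), out.max())
```

The darkest occupied level maps to 0 and the brightest to 255, so the low-contrast input above ends up spanning the full range.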
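RANSAC repeatedly fits a model to a random minimal sample, counts the points that agree with it, keeps the hypothesis with the most inliers, and finally refits on those inliers. A minimal sketch for a 2D line `y = a*x + b`, assuming vertical residuals and a fixed iteration count (both simplifications; real implementations often use perpendicular distance and an adaptive number of iterations). The function name and thresholds are illustrative.

```python
import numpy as np

def ransac_line(points, n_iters=200, inlier_thresh=0.1, rng=None):
    """Fit y = a*x + b robustly with RANSAC. points: (N, 2) array.

    Returns (a, b, inlier_mask).
    """
    rng = np.random.default_rng() if rng is None else rng
    x, y = points[:, 0], points[:, 1]
    best_mask = None
    for _ in range(n_iters):
        i, j = rng.choice(len(points), size=2, replace=False)  # minimal sample
        if np.isclose(x[i], x[j]):
            continue  # vertical pair cannot be written as y = a*x + b
        a = (y[j] - y[i]) / (x[j] - x[i])
        b = y[i] - a * x[i]
        mask = np.abs(y - (a * x + b)) < inlier_thresh  # consensus set
        if best_mask is None or mask.sum() > best_mask.sum():
            best_mask = mask
    if best_mask is None:
        raise ValueError("no valid minimal sample found")
    # Refit with least squares on all inliers of the best hypothesis
    a, b = np.polyfit(x[best_mask], y[best_mask], deg=1)
    return a, b, best_mask

rng = np.random.default_rng(0)
xs = rng.uniform(0, 10, 100)
inliers = np.column_stack([xs, 2.0 * xs + 1.0 + rng.normal(0, 0.02, 100)])
outliers = rng.uniform(0, 20, size=(30, 2))
points = np.vstack([inliers, outliers])
a, b, mask = ransac_line(points, rng=rng)
print(f"a={a:.3f}, b={b:.3f}, inliers found={mask.sum()}")
```

An ordinary least-squares fit on all 130 points would be pulled toward the outliers; the consensus step is what makes the fit robust.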
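For the depth of field calculation, the thin lens model with focal length f, f-number N and acceptable circle of confusion c gives the hyperfocal distance H = f^2 / (N c) + f, a near limit s (H - f) / (H + s - 2f) and a far limit s (H - f) / (H - s) for a focus distance s < H (infinite otherwise). A minimal sketch; the default `coc_mm=0.03` is a common full-frame convention and an assumption here.

```python
import math

def depth_of_field(f_mm, n_stop, focus_mm, coc_mm=0.03):
    """Near/far limits of acceptable sharpness for a thin lens.

    f_mm: focal length, n_stop: f-number N, focus_mm: focus distance s from
    the lens, coc_mm: acceptable circle-of-confusion diameter on the sensor.
    All distances in millimetres. Returns (near, far, hyperfocal).
    """
    hyperfocal = f_mm ** 2 / (n_stop * coc_mm) + f_mm
    near = focus_mm * (hyperfocal - f_mm) / (hyperfocal + focus_mm - 2 * f_mm)
    if focus_mm >= hyperfocal:
        far = math.inf  # everything from `near` to infinity is acceptably sharp
    else:
        far = focus_mm * (hyperfocal - f_mm) / (hyperfocal - focus_mm)
    return near, far, hyperfocal

# 50 mm lens focused at 3 m: stopping down from f/2 to f/8 widens the DoF
for n in (2.0, 8.0):
    near, far, h = depth_of_field(50, n, 3000)
    print(f"f/{n:g}: near={near:.0f} mm, far={far:.0f} mm, hyperfocal={h:.0f} mm")
```

Deriving these limits is a good exercise: set the blur diameter (f/N) * |v_d - v| / v_d equal to c, where v and v_d are the image distances of the focused and defocused points, and solve for the object distance.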

Machine Learning Interviews

For each of the following, we should be able to explain what it is (in 2-3 sentences) and in what context it is used.

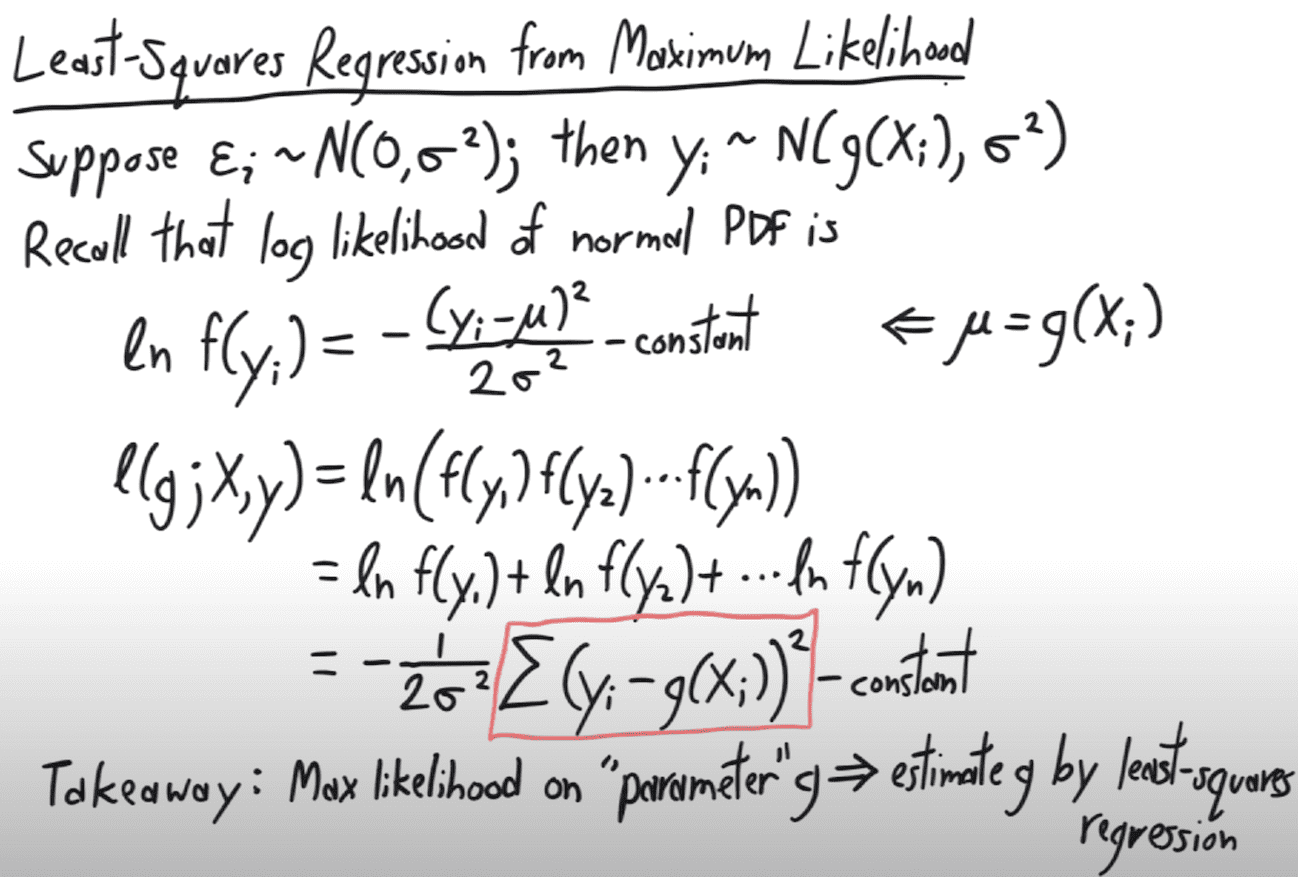

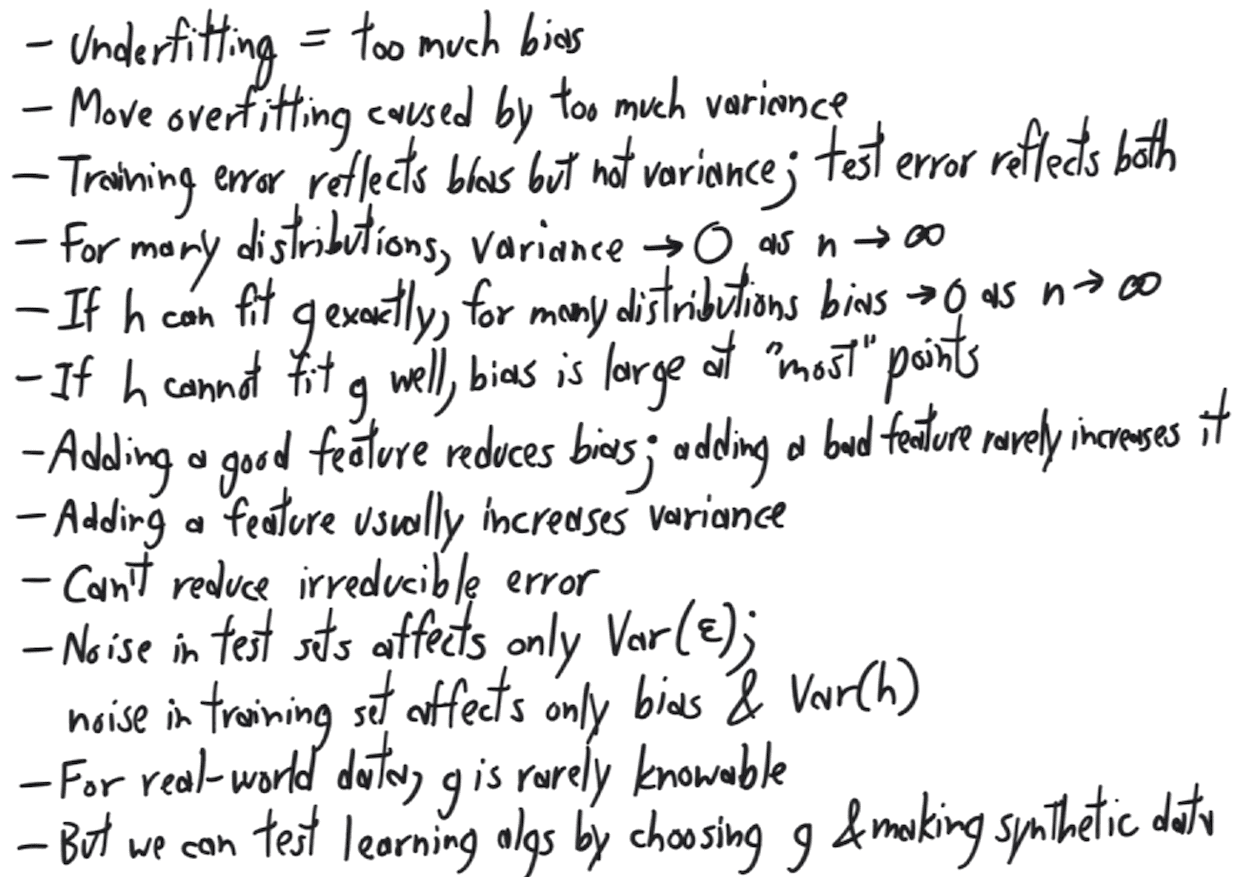

- Likelihood and probability

- Bias and variance: resource

- Naive Bayes: its assumptions and use cases

- Random forest: related concepts like bagging and boosting (additional resources)

- Discriminative and generative models: common algorithms for each

- SVM and kernel SVM: resource, examples of kernels, the pros and cons of each kernel; why Lagrange multipliers work

- Linear regression: also ridge and lasso regression

- Dimensionality reduction: for different modalities such as images and text; the curse of dimensionality

- Correlation and covariance: resource

- Gradient descent variants: the challenges of each method and their solutions

- Optimization methods: pros and cons

- Relationship between SVD and PCA: relevant concepts including matrix singularity and symmetry, positive definite and positive semi-definite matrices, the matrix determinant, eigendecomposition, and how PCA relates to data whitening

- CS189 lecture recording: Jonathan Shewchuk is one of the best professors at Berkeley; he explains both simple and hard things clearly and writes the best teaching notes. I attached two screenshots of Jonathan's iPad notes from his lecture.

- ML concepts explained

- For deep learning topics, watch the CS231 recording

- A basic linear algebra refresher: vector derivatives
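For the gradient descent bullet, the classic challenge is ill-conditioning: plain gradient descent's step size is capped by the steepest direction (here the learning rate must stay below 2/100), so it crawls along the flat direction. Heavy-ball momentum is one standard remedy. A minimal sketch on a hypothetical 2D quadratic; the learning rate, beta = 0.9 and step count are arbitrary illustration values.

```python
import numpy as np

# Ill-conditioned quadratic f(x) = 0.5 * x^T A x with condition number 100
A = np.diag([1.0, 100.0])

def loss(x):
    return 0.5 * x @ A @ x

def grad(x):
    return A @ x

def gradient_descent(x0, lr, steps):
    x = x0.copy()
    for _ in range(steps):
        x = x - lr * grad(x)
    return x

def momentum_descent(x0, lr, beta, steps):
    """Heavy-ball momentum: v is an exponentially weighted sum of past gradients."""
    x, v = x0.copy(), np.zeros_like(x0)
    for _ in range(steps):
        v = beta * v + grad(x)
        x = x - lr * v
    return x

x0 = np.array([1.0, 1.0])
lr = 0.01  # below 2 / 100, the stability limit set by the largest curvature
x_gd = gradient_descent(x0, lr, steps=100)
x_mom = momentum_descent(x0, lr, beta=0.9, steps=100)
print(f"GD loss: {loss(x_gd):.2e}, momentum loss: {loss(x_mom):.2e}")
```

With these settings plain GD shrinks the flat coordinate by only a factor of 0.99 per step, while momentum damps both directions at a similar rate and reaches a much lower loss in the same 100 steps. Adaptive methods such as Adam address the same problem differently, by rescaling the step for each coordinate.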

Derivations

For the following, it helps to write out the derivation or code things up.

- PCA and SVD: lecture 21 of CS189

- Normal equation derivation

- Newton’s method
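For the PCA/SVD relationship: if the centered data matrix is Xc = U S V^T, then Xc^T Xc / (n - 1) = V (S^2 / (n - 1)) V^T, so the right singular vectors are the principal directions and S^2 / (n - 1) are the covariance eigenvalues. A minimal NumPy sketch of that identity (the helper name `pca_svd` is my own); dividing the projected coordinates by the square root of the explained variance would additionally whiten the data.

```python
import numpy as np

def pca_svd(X, k):
    """PCA of X (n_samples, n_features) via SVD of the centered data.

    Returns (components, explained_variance, projected); the rows of
    components (shape (k, n_features)) are the principal directions.
    """
    Xc = X - X.mean(axis=0)
    # Xc = U S Vt; rows of Vt are eigenvectors of the sample covariance
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:k]
    explained_variance = S[:k] ** 2 / (X.shape[0] - 1)
    projected = Xc @ components.T  # equals U[:, :k] * S[:k]
    return components, explained_variance, projected

rng = np.random.default_rng(0)
mixing = np.array([[3.0, 0.0, 0.0], [1.0, 1.0, 0.0], [0.0, 0.0, 0.2]])
X = rng.normal(size=(500, 3)) @ mixing
comps, var, Z = pca_svd(X, 2)
cov_eigs = np.sort(np.linalg.eigvalsh(np.cov(X, rowvar=False)))[::-1]
print(var, cov_eigs[:2])  # the two should agree
```

Working from the SVD of the data avoids explicitly forming the covariance matrix, which is generally better conditioned numerically.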
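For the normal equation, setting the gradient of ||Xw - y||^2 + lambda ||w||^2 to zero gives X^T (Xw - y) + lambda w = 0, i.e. (X^T X + lambda I) w = X^T y; lambda = 0 recovers ordinary least squares and lambda > 0 gives ridge regression. A minimal sketch; `normal_equation` and `ridge_lambda` are illustrative names, not from a specific library.

```python
import numpy as np

def normal_equation(X, y, ridge_lambda=0.0):
    """Solve (X^T X + lambda I) w = X^T y for the least-squares weights w.

    No bias column is added; append a column of ones to X if needed
    (with ridge_lambda > 0 this would also penalize the bias).
    """
    d = X.shape[1]
    gram = X.T @ X + ridge_lambda * np.eye(d)
    # Solving the linear system is cheaper and more stable than inverting gram
    return np.linalg.solve(gram, X.T @ y)

rng = np.random.default_rng(0)
X = np.column_stack([rng.normal(size=(200, 2)), np.ones(200)])
w_true = np.array([2.0, -1.0, 0.5])
y = X @ w_true + rng.normal(0, 0.01, 200)
w_ols = normal_equation(X, y)
w_ridge = normal_equation(X, y, ridge_lambda=100.0)
print(w_ols, w_ridge)  # ridge shrinks the weights toward zero
```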
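Newton's method minimizes the local second-order Taylor model f(x + d) ≈ f(x) + g^T d + 0.5 d^T H d at every step; setting its gradient to zero gives the update d = -H^{-1} g. A minimal sketch, assuming the Hessian stays positive definite and using no line search or damping; the test function is a hypothetical example with its minimum at (0, 1).

```python
import numpy as np

def newton_minimize(grad, hess, x0, tol=1e-10, max_iter=50):
    """Minimize a twice-differentiable function with pure Newton steps."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) < tol:
            break
        # Solve H dx = g instead of forming the inverse Hessian
        x = x - np.linalg.solve(hess(x), g)
    return x

# Example: f(x, y) = exp(x) - x + (y - 1)^2
def grad_f(v):
    return np.array([np.exp(v[0]) - 1.0, 2.0 * (v[1] - 1.0)])

def hess_f(v):
    return np.diag([np.exp(v[0]), 2.0])

print(newton_minimize(grad_f, hess_f, [1.0, 5.0]))
```

The quadratic y-term is solved exactly in a single step, while the x-coordinate shows the quadratic convergence near the optimum (the error roughly squares at each iteration). On a pure quadratic, one Newton step lands exactly on the minimum.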

Jonathan Shewchuk's notes during CS189 (Spring 2020) lecture

Coding Interviews

Last but not least, though definitely my least favorite, is coding, or by its fancier name, the 'technical interview'. It isn't inspiring, but we still need to prepare for it.

I grouped a few topics in my git repo, including greedy algorithms, permutations / power sets, and tree-related problems.
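The repo isn't reproduced here, but permutation and power-set problems share one backtracking template: make a choice, recurse, then undo the choice. A minimal sketch; the function names are my own and not taken from the repo.

```python
from typing import List

def power_set(nums: List[int]) -> List[List[int]]:
    """All subsets: at each index, either skip nums[i] or take it."""
    result: List[List[int]] = []
    current: List[int] = []

    def backtrack(i: int) -> None:
        if i == len(nums):
            result.append(current.copy())
            return
        backtrack(i + 1)            # exclude nums[i]
        current.append(nums[i])     # include nums[i]
        backtrack(i + 1)
        current.pop()               # undo the choice

    backtrack(0)
    return result

def permutations(nums: List[int]) -> List[List[int]]:
    """All orderings: choose which unused element goes next."""
    result: List[List[int]] = []
    current: List[int] = []
    used = [False] * len(nums)

    def backtrack() -> None:
        if len(current) == len(nums):
            result.append(current.copy())
            return
        for i, x in enumerate(nums):
            if used[i]:
                continue
            used[i] = True
            current.append(x)
            backtrack()
            current.pop()           # undo the choice
            used[i] = False

    backtrack()
    return result

print(power_set([1, 2, 3]))     # [[], [3], [2], [2, 3], [1], [1, 3], [1, 2], [1, 2, 3]]
print(permutations([1, 2, 3]))  # [[1, 2, 3], [1, 3, 2], [2, 1, 3], [2, 3, 1], [3, 1, 2], [3, 2, 1]]
```

The power set has 2^n elements and the permutations n!, so both run in exponential time; the "undo" step is what lets a single `current` list be reused across branches.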

Interviewing is a two-way selection process. You are looking for a team that is excited about you, in the same way you feel excited about them. In the end there's no 'optimal' choice; it's always the one where you feel the click. Best of luck!